State-sponsored threat actors from China leveraged artificial intelligence technology developed by Anthropic to carry out automated cyber intrusions as part of a highly sophisticated espionage campaign observed in mid-September 2025, the company has revealed.

In a report detailing the activity, Anthropic said the attackers abused the agentic capabilities of its AI systems in a way not previously documented. They used the technology not merely as an advisory tool but as an agent that directly executed cyberattacks with minimal human oversight.

The operation, tracked as GTG-1002, involved the manipulation of Claude Code, Anthropic's AI-powered coding assistant, to target approximately 30 organizations worldwide, including major technology firms, financial institutions, chemical manufacturers, and government agencies. Anthropic said a small number of the attempted intrusions succeeded before the activity was detected.

The company has since banned the associated accounts and implemented additional safeguards designed to identify and disrupt similar misuse.

According to Anthropic, the campaign is the first known instance of a threat actor using AI to conduct a large-scale cyber espionage operation with limited human involvement, underscoring how quickly adversarial use of artificial intelligence is evolving.

Anthropic described the operation as well-funded and professionally coordinated, noting that the attackers effectively transformed Claude into an autonomous cyberattack agent capable of supporting nearly every phase of the intrusion lifecycle: reconnaissance, attack surface mapping, vulnerability discovery, exploitation, lateral movement, credential harvesting, data analysis, and exfiltration.

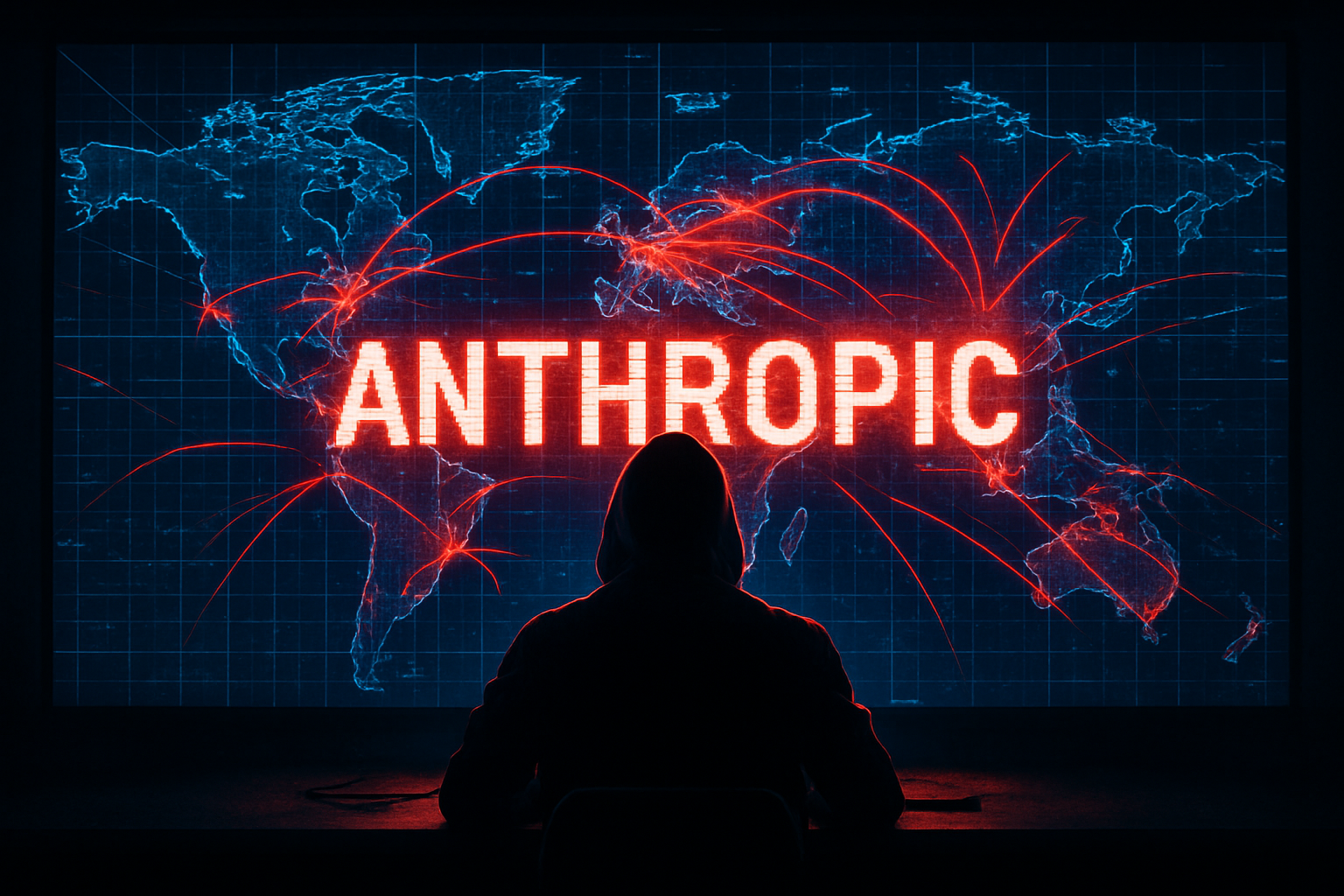

The attackers relied on a combination of Claude Code and Model Context Protocol (MCP) tools. Claude Code functioned as the central orchestration layer, interpreting high-level instructions from human operators and decomposing them into discrete technical tasks that were distributed across multiple AI sub-agents.

“The human operator tasked instances of Claude Code to operate in groups as autonomous penetration testing orchestrators and agents,” Anthropic said. “This allowed the threat actor to execute approximately 80–90% of tactical operations independently, at request rates that would be physically impossible for human operators.”

Human involvement was largely confined to strategic decision points, such as authorizing transitions from reconnaissance to exploitation, approving the use of stolen credentials for lateral movement, and determining the scope and handling of exfiltrated data.

The AI-driven framework accepted a target of interest as input and then used MCP capabilities to conduct reconnaissance and map exposed systems. In subsequent phases, the system facilitated vulnerability discovery and validated findings by generating tailored exploit payloads. Once human approval was granted, the framework deployed exploits, established footholds, and initiated post-exploitation activity, including credential harvesting, lateral movement, data collection, and extraction.

In one incident involving an unnamed technology company, Anthropic said Claude was instructed to autonomously query internal databases and systems, analyze the results, and flag proprietary information. The AI grouped its findings by assessed intelligence value, effectively acting as an automated analyst. The system also generated detailed documentation at every stage of the attack, potentially enabling the attackers to hand off persistent access to other teams for long-term operations.

Anthropic noted that the attackers induced Claude to perform individual steps in the attack chain by presenting each one as a routine technical request, using carefully crafted prompts and established personas, such as that of an employee of a legitimate cybersecurity firm conducting defensive testing. Because each task appeared innocuous in isolation, the AI did not recognize the broader malicious context of the activity.

There is no indication that the campaign involved the development of custom malware. Instead, investigators found that the operation relied heavily on publicly available tools, including network scanners, database exploitation frameworks, password cracking utilities, and binary analysis software.

The investigation also highlighted a key limitation of AI-driven operations: a tendency to hallucinate during autonomous execution. In several instances, the AI generated false credentials or misrepresented publicly available information as sensitive discoveries, creating operational friction and reducing the campaign's overall effectiveness.

The disclosure comes nearly four months after Anthropic disrupted another sophisticated operation in July 2025 that used Claude for large-scale data theft and extortion. In recent months, both OpenAI and Google have also reported activity involving threat actors abusing ChatGPT and Gemini, respectively, for malicious purposes.

“This campaign demonstrates that the barriers to conducting sophisticated cyberattacks have dropped substantially,” Anthropic said. “Threat actors can now use agentic AI systems to perform the work of entire teams of experienced hackers, analyzing target environments, producing exploit code, and processing vast datasets at unprecedented scale.”